Building Cloud Functions That Scale

Performance and scalability tips for starting a new project with Google Cloud Functions

I've been using Cloud Functions heavily for the last year, and I must say, I'm a fan. In this article, we'll go through some tips to keep in mind when starting a new project with Cloud Functions:

- File Structure

- Separate Logic From Trigger

- Unit Tests Are Still Relevant

- Logs, Don't forget about logs

- Lazy Load Dependencies

- Use Few and Use Popular

- Cache Initialized Google APIs Clients

- References and More Read

File Structure

File structure matters if you want your code to scale and stay easy to navigate as you add more and more features.

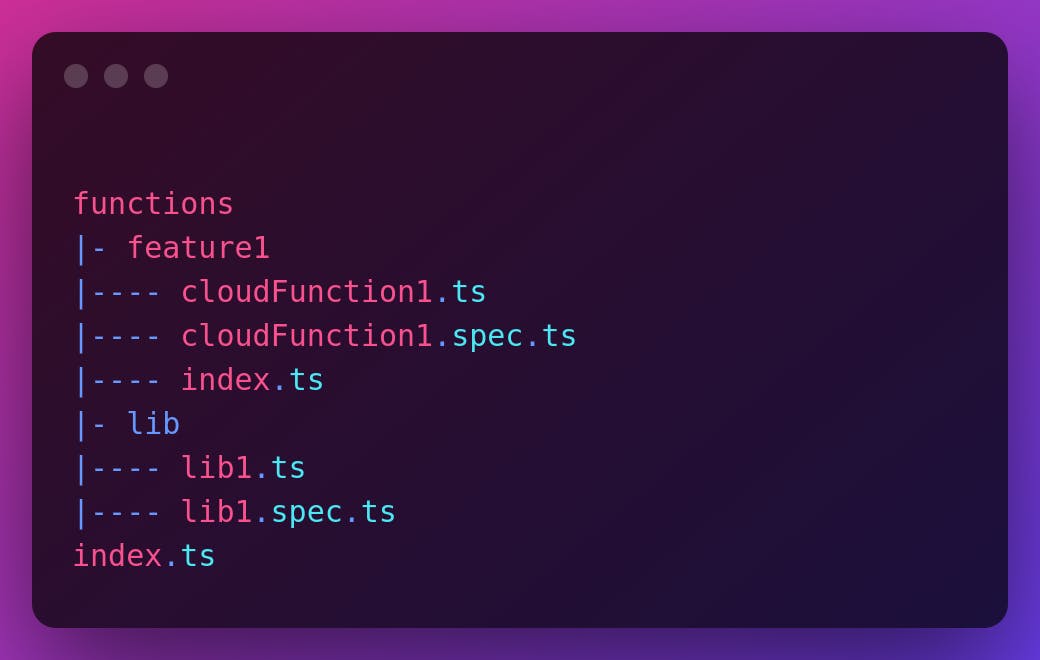

Personally, I find this file structure suitable for most projects, especially as you start developing cloud functions.

Cloud functions are separated by module (feature), and each function has its own file.

Under each module, there should be an index file that exports all of the module's cloud functions. That way, the main index.ts file (which should export every cloud function you are using) can import the cloud functions it needs from a module simply by path: ./functions/feature1.

lib is where "packageable" code should live: code that has nothing to do with your framework, triggers, etc., and contains only framework-agnostic logic or package interfaces. The reason for this folder is that the code under it can later be extracted into packages and used in multiple projects, instead of copying the same logic everywhere.

Separate Logic From Trigger

It's best to write cloud functions as pure functions. Your code will also be more testable and reusable if you separate the trigger logic from the cloud function itself.

For example, let's say you have a cloud function that creates a User Activity record and saves it in Firestore. If you start with an HTTP cloud function (invoked as a webhook), the code can be something like this:

export const createRecord = async (request, response) => {

const { userId, activity, timestamp } = request.body;

const createdRecord = { userId, activity, timestamp }; // Placeholder: the Firebase create-document logic goes here

response.status(201).json({ createdRecord });

}

Later on, you'd like to create a User Activity record from a Pub/Sub subscription instead of an HTTP call from a client. That means you first have to Base64-decode the message body to extract the record info.

You'd have to either copy the logic and specs or, better, extract the main steps and abstract away the context by passing only the relevant parameters. So I think it's best to start with a separate function plus HTTP and Pub/Sub adapters.

const createRecord = async (userId, activity, timestamp) => {

// Any Object Setup Logic

const createdRecord = { userId, activity, timestamp }; // Placeholder: the Firebase create-document logic goes here

return createdRecord;

};

export const createRecordHttpTriggered = async (request, response) => {

const { userId, activity, timestamp } = request.body;

const createdRecord = await createRecord(userId, activity, timestamp);

response.status(201).json({ createdRecord });

};

export const createRecordPubSubTriggered = async (message, context) => {

const { userId, activity, timestamp } = JSON.parse(Buffer.from(message.data, 'base64').toString());

const createdRecord = await createRecord(userId, activity, timestamp);

return createdRecord;

};

Unit Tests Are Still Relevant

I don't think we need to go over why unit tests are important, but I have to confess that when I started developing cloud functions, I didn't cover them with unit tests from the start, which I now regret.

If you have separated functions from triggers, then testing the main logic in most functions is as easy as testing input and output pairs and corner cases. You can also test that your adapters extract the correct info, and you're good to go.
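As a rough illustration of how small such tests can be, here is a sketch that checks a piece of pure logic and a Pub/Sub adapter step using Node's built-in assert module. buildActivityRecord and parsePubSubData are hypothetical names invented for this example, as is the normalization rule; a real project would more likely run these checks through a test runner.

```typescript
import { strict as assert } from 'node:assert';

// Hypothetical record shape for this sketch.
interface ActivityRecord {
  userId: string;
  activity: string;
  timestamp: number;
}

// Hypothetical pure logic: validates input and builds the record to be saved.
const buildActivityRecord = (
  userId: string,
  activity: string,
  timestamp: number,
): ActivityRecord => {
  if (!userId) throw new Error('userId is required');
  return { userId, activity: activity.trim().toLowerCase(), timestamp };
};

// Hypothetical adapter step: decodes the Base64 payload of a Pub/Sub message.
const parsePubSubData = (data: string): ActivityRecord =>
  JSON.parse(Buffer.from(data, 'base64').toString());

// Input/output pair for the logic.
assert.deepEqual(buildActivityRecord('u1', ' Login ', 1000), {
  userId: 'u1',
  activity: 'login',
  timestamp: 1000,
});

// Corner case: a missing user ID is rejected.
assert.throws(() => buildActivityRecord('', 'login', 1000), /userId is required/);

// The adapter extracts the same fields that were published.
const payload = { userId: 'u1', activity: 'login', timestamp: 1000 };
const encoded = Buffer.from(JSON.stringify(payload)).toString('base64');
assert.deepEqual(parsePubSubData(encoded), payload);
```

Because neither function touches a request, a response, or Firestore, no mocks are needed for these checks.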

Logs, Don't forget about logs

If you're using Google Cloud Functions (or Firebase Cloud Functions), a logger is already set up and ready to go. Take advantage of it by using log levels, e.g. console.debug, console.info, console.warn and console.error, when appropriate, and don't just depend on the default logs that say the function was invoked and that it succeeded in x ms.

A good rule of thumb is to act as if your function is misbehaving and ask yourself what sort of info you'd need to debug it. You'll thank yourself later; I've learned that the hard way.
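To make the log levels concrete, here is a sketch of a function body that logs at each severity. The name handleActivity, its input shape, and its validation rules are made up for illustration; only the console methods come from the text above.

```typescript
// Hypothetical input shape for this sketch.
interface ActivityInput {
  userId?: string;
  activity?: string;
}

// Hypothetical handler logic that logs what you would want to know when debugging.
const handleActivity = (input: ActivityInput): { ok: boolean; reason?: string } => {
  // Debug: raw detail that is only useful while investigating a problem.
  console.debug('handleActivity input', JSON.stringify(input));

  if (!input.userId) {
    // Error: the request cannot be processed at all.
    console.error('handleActivity rejected: missing userId');
    return { ok: false, reason: 'missing userId' };
  }

  if (!input.activity) {
    // Warn: suspicious but recoverable, so a default is used below.
    console.warn(`handleActivity: no activity for user ${input.userId}, using "unknown"`);
  }

  const activity = input.activity || 'unknown';

  // Info: the normal, high-level outcome of the invocation.
  console.info(`handleActivity recorded "${activity}" for user ${input.userId}`);
  return { ok: true };
};
```

Each message names the function and the identifiers involved, so a single log line is enough to tell which user and which code path produced it.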

Lazy Load Dependencies

When a request comes into your function and there is no already-initialized instance available, a new instance is initialized. The time it takes to initialize that instance until it's ready to handle your request is called a "cold start".

A good chunk of cold start time goes to evaluating the global context of your functions. As you import more and more packages, the time needed increases, even if the cloud function handling this request doesn't use a particular package.

An example given by this tutorial: suppose you have two functions, f1 and f2, as follows:

const functions = require('firebase-functions');

const admin = require('firebase-admin');

admin.initializeApp();

exports.f1 = functions.https.onRequest((req, res) => {

// Uses Firebase Admin SDK

});

exports.f2 = functions.https.onRequest((req, res) => {

// Doesn't use Firebase Admin SDK

});

Even though the request is handled by f2, which doesn't use the Firebase Admin SDK, the SDK still has to be imported and initialized first.

A quick solution is to lazy load dependencies inside the functions themselves. You can easily do so in Node.js or TypeScript using require() or a dynamic import(); keep in mind that import() is asynchronous and returns a promise.

Node:

function f2() {

const { sample } = require('lodash');

// ...

}

TypeScript:

async function f1() {

const { default: Axios } = await import('axios');

// ...

}

Use Few and Use Popular

Another way to decrease cold start time is to decrease the number of packages.

You should use as few packages as possible. If you're importing a package just for one function, consider copying that function into your codebase instead.

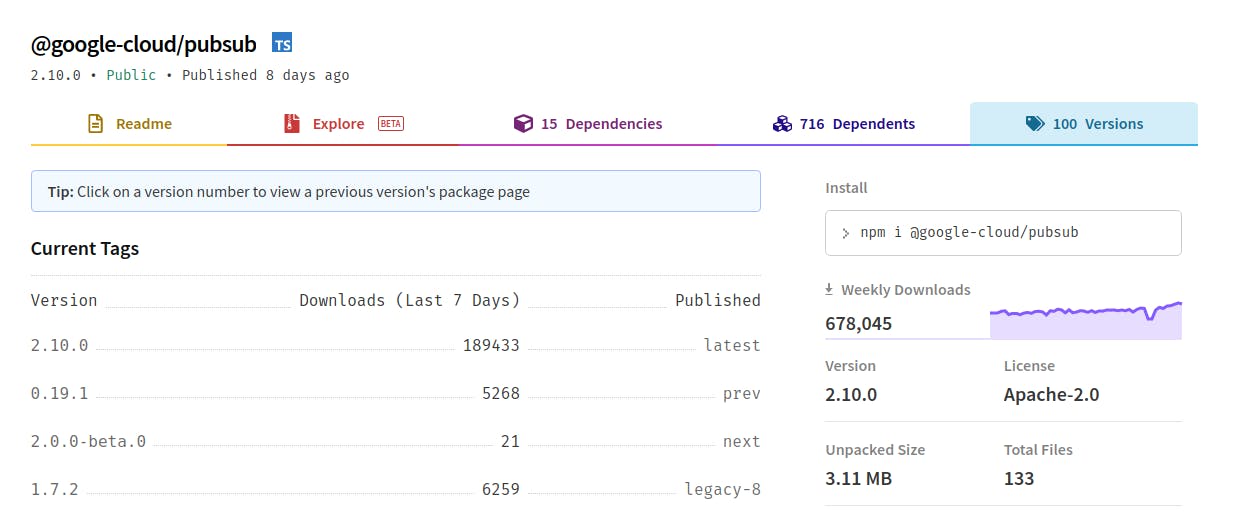

Also make sure that you're using the most popular version of each package. It has a higher probability of already existing in the Google Cloud packages cache, which saves time. You can check the Versions tab on the package's npm page to get a sense of which version is downloaded the most.

Cache Initialized Google APIs Clients

While there's no guarantee, Cloud Functions often reuses the execution environment of a previous invocation.

So if you declare a variable in the global scope, its value can be reused in subsequent invocations without having to be recomputed. This way you can cache objects that may be expensive to recreate on each function invocation.
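This reuse can be simulated locally by calling a handler several times in one process. In the sketch below, loadExpensiveConfig and handler are hypothetical names, and the counter exists only to show that the expensive step runs once per instance rather than once per invocation.

```typescript
// Counts how often the expensive step actually runs (for demonstration only).
let initCount = 0;

// Hypothetical expensive setup, e.g. parsing config or creating an API client.
const loadExpensiveConfig = (): { region: string } => {
  initCount += 1;
  return { region: 'us-central1' };
};

// Global scope: survives between invocations when the instance is reused.
let cachedConfig: { region: string } | undefined;

// Hypothetical function body: initializes on first use, then reuses the cached value.
const handler = (): string => {
  if (!cachedConfig) {
    cachedConfig = loadExpensiveConfig();
  }
  return cachedConfig.region;
};

// Three invocations on the same "instance" trigger a single initialization.
handler();
handler();
handler();
console.log(initCount); // 1
```

Since instance reuse is not guaranteed, the handler must still work when cachedConfig is empty, which is why the check lives inside the function.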

You can also optimize networking by caching initialized Google API clients, such as the Pub/Sub client, to avoid unnecessary connections and DNS lookups.

This can be combined with lazy loading of dependencies like so:

let initializedFirebaseApp;

const createFirebaseApp = async () => {

const admin = await import('firebase-admin');

// ...

if (!initializedFirebaseApp) {

const firebaseApp = admin.initializeApp({

// ...

});

initializedFirebaseApp = firebaseApp;

}

return initializedFirebaseApp;

};
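The same pattern can be factored into a small reusable helper. The sketch below is one possible generalization, not something the code above requires: lazyOnce is a hypothetical helper that caches the promise rather than the resolved value, so overlapping calls share a single initialization. The fake createClient factory stands in for a real dynamic import followed by client setup.

```typescript
// Hypothetical helper: runs an async factory at most once and caches its promise.
const lazyOnce = <T>(factory: () => Promise<T>): (() => Promise<T>) => {
  let cached: Promise<T> | undefined;
  return () => {
    if (!cached) {
      cached = factory().catch((error) => {
        // Forget a failed attempt so that the next call can retry.
        cached = undefined;
        throw error;
      });
    }
    return cached;
  };
};

// Counts factory runs (for demonstration only).
let clientsCreated = 0;

// Stand-in for a dynamic import followed by client initialization.
const createClient = async (): Promise<{ id: number }> => {
  clientsCreated += 1;
  return { id: clientsCreated };
};

const getClient = lazyOnce(createClient);

const main = async (): Promise<void> => {
  // Two overlapping calls and one later call all receive the same client.
  const [a, b] = await Promise.all([getClient(), getClient()]);
  const c = await getClient();
  console.log(a === b && b === c, clientsCreated); // true 1
};

main();
```

Clearing the cache on failure is a design choice in this sketch: without it, one failed initialization would be returned to every later invocation on that instance.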